Deploying RAFT-Stereo in ROS2 on a NVIDIA Jetson Xavier AGX and an OAKD-Lite Camera

Abstract⌗

With the OAKD-Lite an affordable stereo camera is available that can be used as a starting point for robotic projects. While the accompanying software stack DepthAI is powerful, the onboard resources of the camera are limited and the 3D reconstruction results are often not satisfying. On the other hand we have powerful depth estimation algorithms such as deep stereo networks readily available1. As the camera also comes along with ROS packages and interfaces, nothing is stopping us from extending the hardware of the camera with a more powerful GPU and deploying a method like the RAFT-Stereo network2. In this post we will cover the process of setting up the toolchain for a NVIDIA jetson as well as a ROS node to run a deep stereo network on the images captured by the OAKD-Lite camera. All source code is available at this repository.

Table of contents⌗

- Setup Jetson

- Installing dependencies

- Building the base container

- Creating the development container

- Setting up IDE

- Implementing the ROS node

- Launching & Testing

Preliminaries⌗

For this setup I used a Jetson Xavier AGX, the OAKD-Lite camera the RAFT stereo network, pytorch:2.0.0+nv23.05 and ROS:humble. A great resource to check out different stereo networks offline is the stereodemo1 python package.

Setup Jetson⌗

- Setup your jetson following the official documentation

- Log in to your jetson

We keep the modifications on the Jetson native OS minimal but its not going to work completely without. We’ll need to configure some permissions and USB rules to have access to the OAK-D camera:

echo 'SUBSYSTEM=="usb", ATTRS{idVendor}=="03e7", MODE="0666"' | sudo tee /etc/udev/rules.d/80-movidius.rules

sudo usermod -aG video $(whoami)

sudo reboot # or just log out and log in again

Installing Dependencies⌗

We will need some dependencies installed before we can write our node. Mainly we need:

- ROS2 as a message passing framework

- Pytorch to run the network inference

- DepthAI drivers to connect to our camera in simple way.

We want to keep an isolated system and avoid dependency and build headaches, therefore we will work in a docker container. This also prevents messing up the system installation and we can easily set back the system in case sth goes wrong. In this readme we find a good descripton to configure docker on the Jetson.

Unfortunately, we cannot simply install all packages the same way we do on a laptop. Most prebuilt binaries are only available for x86 architecture, while on Jetson we have an ARM architecture. Hence, a couple of packages need to be compiled in particular for / on the Jetson. As I have already done this steps you can use my container as a base and skip right through to Creating the development container. If you are interested in doing the process yourself / you want to do some customizations and you have some time. Feel free to continue reading here.

Building the base container⌗

Our first goal will be to create build container that has all the dependencies. Luckily there is a tool available that simplifies the process.

sudo apt-get update && sudo apt-get install git python3-pip

git clone --depth=1 https://github.com/dusty-nv/jetson-containers

cd jetson-containers

pip3 install -r requirements.txt

Now we can build a docker image with ros and pytorch installed. This will take quite some time so in case you are connected via ssh maybe it’s worth using a screen.

sudo apt install screen && screen # only if ssh

export ROS_DISTRO=humble

./build.sh --name=ros-$ROS_DISTRO-base-l4t-pytorch-onnx ros:$ROS_DISTRO-ros-base l4t-pytorch onnx

This will basically build separate images for each of the “packages” and finally combine them to one container. It’s quite useful as it saves us messing will all the compile flags, versions etc to build these libraries ourselves. However, I had a couple of build errors when running it. It helped checking out the individual Dockerfiles within the packages/ folder. Luckily, the intermediate containers are stored so we don’t have to start from scratch each time.

Finally wheren everything is build I recommend storing the docker image in a safe place as the docker harbor. Create a user account and push the image with:

export ROS_DISTRO=humble

export DOCKER_HARBOR_USERNAME=$USER #change accordingly

docker login ..

docker tag $ROS_DISTRO-base-l4t-pytorch-onnx $DOCKER_HARBOR_USERNAME/jetson-$ROS_DISTRO-base-l4t-pytorch-onnx

docker push $DOCKER_HARBOR_USERNAME/jetson-$ROS_DISTRO-base-l4t-pytorch-onnx

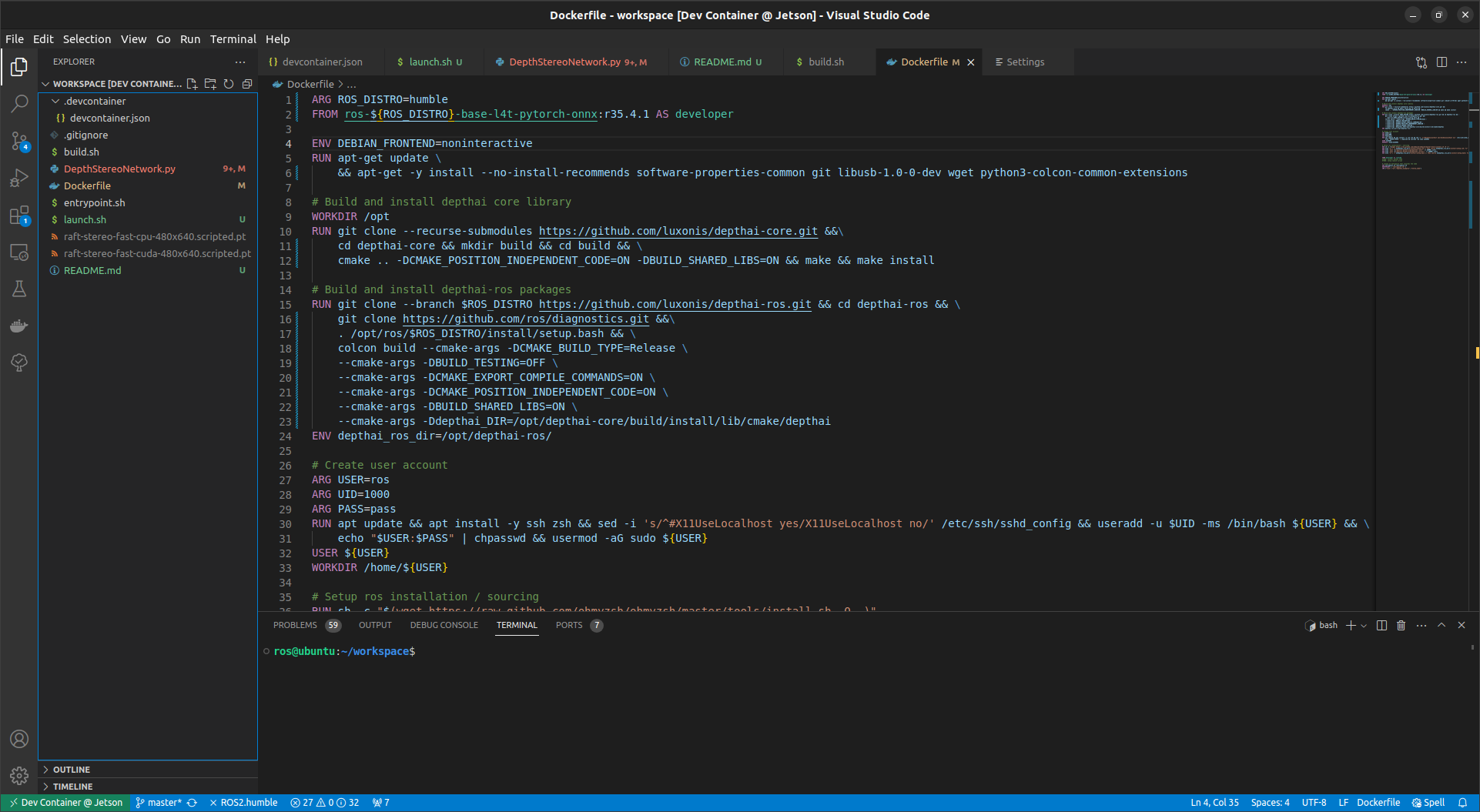

Creating the development container⌗

In case you haven’t build the base container yourself, pull it first from the docker harbor:

docker pull phildue/jetson-ros-humble-base-l4t-pytorch-onnx

docker tag phildue/jetson-ros-humble-base-l4t-pytorch-onnx jetson-ros-humble-base-l4t-pytorch-onnx

Unfortunately we are not done with dependencies yet, we still need the depth-ai drivers. So from now on we work on our own Dockerfile which extends the container we build in the previous step.

ARG ROS_DISTRO=humble

FROM ros-$ROS_DISTRO-base-l4t-pytorch-onnx:r35.4.1 AS developer

ENV DEBIAN_FRONTEND=noninteractive

RUN apt-get update \

&& apt-get -y install --no-install-recommends software-properties-common git libusb-1.0-0-dev wget python3-colcon-common-extensions

# Build and install depthai core library

WORKDIR /opt

RUN git clone --recurse-submodules https://github.com/luxonis/depthai-core.git &&\

cd depthai-core && mkdir build && cd build && \

cmake .. -DCMAKE_POSITION_INDEPENDENT_CODE=ON -DBUILD_SHARED_LIBS=ON && make && make install

# Build and install depthai-ros packages

RUN git clone --branch $ROS_DISTRO https://github.com/luxonis/depthai-ros.git && cd depthai-ros && \

git clone https://github.com/ros/diagnostics.git &&\

. /opt/ros/$ROS_DISTRO/install/setup.bash && \

colcon build --cmake-args -DCMAKE_BUILD_TYPE=Release \

--cmake-args -DBUILD_TESTING=OFF \

--cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON \

--cmake-args -DCMAKE_POSITION_INDEPENDENT_CODE=ON \

--cmake-args -DBUILD_SHARED_LIBS=ON \

--cmake-args -Ddepthai_DIR=/opt/depthai-core/build/install/lib/cmake/depthai

ENV depthai_ros_dir=/opt/depthai-ros/

# Create user account

ARG USER=ros

ARG UID=1000

ARG PASS=pass

RUN apt update && apt install -y ssh zsh && sed -i 's/^#X11UseLocalhost yes/X11UseLocalhost no/' /etc/ssh/sshd_config && useradd -u $UID -ms /bin/bash ${USER} && \

echo "$USER:$PASS" | chpasswd && usermod -aG sudo ${USER}

USER ${USER}

WORKDIR /home/${USER}

# Setup ros installation / sourcing

RUN sh -c "$(wget https://raw.github.com/ohmyzsh/ohmyzsh/master/tools/install.sh -O -)"

RUN echo "if [ -f ${depthai_ros_dir}/install/setup.zsh ]; then source ${depthai_ros_dir}/install/setup.zsh; fi" >> $HOME/.zshrc

RUN echo 'eval "$(register-python-argcomplete3 ros2)"' >> $HOME/.zshrc

RUN echo 'eval "$(register-python-argcomplete3 colcon)"' >> $HOME/.zshrc

RUN echo "if [ -f ${depthai_ros_dir}/install/setup.bash ]; then source ${depthai_ros_dir}/install/setup.bash; fi" >> $HOME/.bashrc

Let’s create a project directory “jetson_oakd_stereo” and place this “Dockerfile” inside. Then we can execute the build:

docker build . --target developer -t jetson-oakd-stereo:dev \

--build-arg UID=$(id -u)

This will again take a couple of minutes and until the docker image is built.

Finally, we have a docker image that contains all the dependencies that we need compiled for the Jetson-ARM architecture.

Setting up IDE⌗

My preferred development setup is the following host: vscode + remote containers extension –ssh–> jetson: vscode-server + devcontainers extension –> devcontainer: dependencies.

This allows to work conveniently from the laptop but still in an encapsulated environment. For the setup we mainly need to install vscode on the host and the remote containers extension. Then we can ssh to the Jetson which will automatically install vscode-server. In the remote instance we can then install the devcontainers extension. Finally, we create subfolder in the project folder (jetson-oakd-stereo), “.devcontainers” and a file “devcontainers.json” with following content:

// See https://aka.ms/vscode-remote/devcontainer.json for format details.

{

"image": "jetson-oakd-stereo:dev",

"workspaceMount": "source=${localWorkspaceFolder}/,target=/home/ros/workspace,type=bind",

"workspaceFolder": "/home/ros/workspace",

"remoteUser": "ros",

"runArgs": [

"--network=host",

"--cap-add=SYS_PTRACE",

"--security-opt=seccomp:unconfined",

"--security-opt=apparmor:unconfined",

"--volume=/tmp/.X11-unix:/tmp/.X11-unix",

"--privileged",

"--runtime=nvidia",

"-v","/dev/bus/usb:/dev/bus/usb",

"--device-cgroup-rule","c 189:* rmw"

],

"containerEnv": { "DISPLAY": "${localEnv:DISPLAY}" },

// Set *default* container specific settings.json values on container create.

"mounts":[

],

"customizations": {

"vscode": {

"extensions": [

"DavidAnson.vscode-markdownlint",

"dotjoshjohnson.xml",

"ms-python.python",

],

"settings": {

"terminal.integrated.profiles.linux": {

"bash": {

"path": "bash"

},

},

"terminal.integrated.defaultProfile.linux": "bash"

}

}

}

}

This will configure the development container we are about to start. Open the command palette (Ctrl+P) and select “Open Folder in container…”.

After a short setup phase our container should be ready. You can check it by running “ros2 in a terminal. It should look something like this;

ros@ubuntu:~/workspace$ ros2

usage: ros2 [-h] [--use-python-default-buffering] Call `ros2 <command> -h` for more detailed usage. ...

ros2 is an extensible command-line tool for ROS 2.

...

Implementing the ROS node⌗

For our system we will use the raft-stereo network2. It takes two camera images as an input and predicts a disparity image for both.

The constructor⌗

Let’s start with the constructor we will:

- Declare some parameters

- Download the respective JIT script of the model and move it to the GPU

- Prepare publishers and subscribers

class DepthStereoNetwork(Node):

"""A ROS2 node for running a deep stereo network."""

def __init__(self):

"""Initialize the node.

"""

super().__init__('depth_stereo_network')

self._declare_parameters()

model_path = Path(

f'{self._model_name}-{self._device}-{self._height}x{self._width}.scripted.pt'

)

if not model_path.exists():

self._download_model(urls[model_path.name], model_path)

assert model_path.exists()

self.net = torch.jit.load(model_path)

self.net.eval()

torch.no_grad()

self.net = self.net.to(torch.device(self._device))

self._bridge = CvBridge()

self.pub_disp = self.create_publisher(DisparityImage, '/disparity', 10)

self.pub_depth = self.create_publisher(Image, '/depth', 10)

self.pub_pcl = self.create_publisher(PointCloud2, '/points2', 10)

camera_info_left = message_filters.Subscriber(

self, CameraInfo, 'left/camera_info'

)

image_left = message_filters.Subscriber(self, Image, 'left/image_rect')

camera_info_right = message_filters.Subscriber(

self, CameraInfo, 'right/camera_info'

)

image_right = message_filters.Subscriber(

self, Image, 'right/image_rect')

ts = message_filters.ApproximateTimeSynchronizer(

[camera_info_left, image_left, camera_info_right, image_right],

1,

0.1,

allow_headerless=True,

)

ts.registerCallback(self.callback)

self.get_logger().info('Node ready.')

...

Next to some calibration parameters such as the valid depth ranges, we control the network resolution via the parameters. This gives us a simple method to increase the inference speed.

def _declare_parameters(self):

self._max_depth = (

self.declare_parameter(

'max_depth', 20.0).get_parameter_value().double_value

)

self._min_depth = (

self.declare_parameter(

'min_depth', 0.01).get_parameter_value().double_value

)

self._delta_d = (

self.declare_parameter(

'delta_d', 1).get_parameter_value().integer_value

)

self._width = (

self.declare_parameter(

'width', 640).get_parameter_value().integer_value

)

self._height = (

self.declare_parameter(

'height', 480).get_parameter_value().integer_value

)

self._model_name = (

self.declare_parameter('model_name', 'raft-stereo-fast')

.get_parameter_value()

.string_value

)

self._device = (

self.declare_parameter(

'device', 'cuda').get_parameter_value().string_value

)

assert self._device in ['cpu', 'cuda']

With a given image resolution we can download the corresponding model. Luckily nburrus was nice enough to export various scripted models of RAFT and upload them to github.

def _download_model(model_path: Path):

urls = {

'raft-stereo-fast-cuda-128x160.scripted.pt': 'https://github.com/nburrus/stereodemo/releases/download/v0.1-raft-stereo/raft-stereo-fast-cuda-128x160.scripted.pt',

'raft-stereo-fast-cuda-256x320.scripted.pt': 'https://github.com/nburrus/stereodemo/releases/download/v0.1-raft-stereo/raft-stereo-fast-cuda-256x320.scripted.pt',

'raft-stereo-fast-cuda-480x640.scripted.pt': 'https://github.com/nburrus/stereodemo/releases/download/v0.1-raft-stereo/raft-stereo-fast-cuda-480x640.scripted.pt',

'raft-stereo-fast-cuda-736x1280.scripted.pt': 'https://github.com/nburrus/stereodemo/releases/download/v0.1-raft-stereo/raft-stereo-fast-cuda-736x1280.scripted.pt',

}

url = urls[model_path.name]

filename = model_path.name

with tempfile.TemporaryDirectory() as d:

tmp_file_path = Path(d) / filename

print(f'Downloading {filename} from {url} to {model_path}...')

urllib.request.urlretrieve(url, tmp_file_path)

shutil.move(tmp_file_path, model_path)

The image callback⌗

In the constructor we declared the callback that is triggered when new images arrive. Now we need to define that callback. For each image we will:

- Preprocess the image, move it to the GPU

- Run the inference

- Postprocess the image and move it to the CPU

- Publish the disparity as ROS message

- Transform the disparity to depth and publish it as image message

- Reconstruct the camera point cloud and publish it as point cloud message

def callback(self, msg_cam_info_l, msg_img_l, msg_cam_info_r, msg_img_r):

"""Estimate disparity from a given pair of images and publish the result.

(1) Preprocess both images and move it to the GPU

(2) Run the inference

(3) Move the result back to the CPU and publish as a disparity msg / depth map msg / point cloud msg

"""

self.get_logger().info(

f'Image recevied: {msg_img_l.height},{msg_img_l.width} | {msg_img_r.height},{msg_img_r.width}'

)

t0 = time.time()

img_l = self._bridge.imgmsg_to_cv2(msg_img_l)

img_r = self._bridge.imgmsg_to_cv2(msg_img_r)

tensor_l = self._preprocess(np.asarray(img_l))

tensor_r = self._preprocess(np.asarray(img_r))

t1 = time.time()

outputs = self.net(tensor_l, tensor_r)

t2 = time.time()

disparity = self._postprocess(outputs, target_shape=img_l.shape)

focal_length = msg_cam_info_l.k[0]

baseline = -msg_cam_info_l.p[3]/focal_length

self.pub_disp.publish(self._create_disparity_msg(

disparity, msg_img_l.header, focal_length=focal_length,

baseline=baseline))

# https://github.com/princeton-vl/RAFT-Stereo#converting-disparity-to-depth

cxl = msg_cam_info_l.k[2]

cxr = msg_cam_info_r.k[2]

depth = baseline*focal_length/np.abs(disparity + cxl - cxr)

self.pub_depth.publish(self._bridge.cv2_to_imgmsg(

depth, header=msg_img_l.header))

xyzi = self._reconstruct(

depth, np.array(msg_cam_info_l.k).reshape(3, 3), img_l)

self.pub_pcl.publish(self._create_pcl_msg(

xyzi, msg_img_l.header, 'xyzi'))

self.get_logger().info(

f'[{msg_img_l.header.stamp.sec}.{msg_img_l.header.stamp.nanosec}] Total: {time.time() - t0}s, Inference: {t2 - t1}s')

Pre- and postprocessing mainly involves providing the correct shape and size of the image to the network. E.g. toch uses channel first ordering. In case we want to work on lower resolutions we need to downsample the input and upsample the network output.

I noticed a black stripe in the left image of my OAK-D Lite which led to strange depth estimates. Masking that region improved the results.

def _preprocess(self, img: np.ndarray):

# [H,W,C] -> [H,W,CCC] -> [CCC,H,W]

# Normalization done in the model itself.

img = cv.cvtColor(img, cv.COLOR_GRAY2RGB)

img = torch.from_numpy(img).permute(

2, 0, 1).unsqueeze(0).float().to(torch.device(self._device))

return F.interpolate(

img, size=(self._height, self._width), mode='area')

def _postprocess(self, outputs, target_shape):

# [N,d,H,W] -> [H,W,d]

disparity = (

outputs[1].detach() * -1.0

)

if disparity.shape[:2] != target_shape:

disparity = F.interpolate(disparity, size=(

target_shape[0], target_shape[1]), mode='area')

x_scale = target_shape[1] / float(self._width)

disparity *= np.float32(x_scale)

disparity = disparity.squeeze().cpu().numpy()

# mask out image regions with black stripe FIXME

disparity[:, -40:] = np.nan

disparity[-20:] = np.nan

In order to create a point cloud from the estimated depth we reproject all pixel coordinates with the inverse camera matrix and scale by the depth:

def _reconstruct(self, Z, K, I):

# xyz = z * K^-1 * uv1

uv = np.dstack(np.meshgrid(

np.arange(Z.shape[1]), np.arange(Z.shape[0]))).reshape(-1, 2)

uv1 = np.ones((uv.shape[0], 3))

uv1[:, :2] = uv

xyz = (Z.reshape((-1, 1)) * (np.linalg.inv(K) @

uv1.T).T).reshape(Z.shape[0], Z.shape[1], 3)

intensity = I[uv[:, 1], uv[:, 0]].reshape(Z.shape[0], Z.shape[1], 1)

xyz[xyz[:, :, 2] <= 0] = np.nan

return np.dstack([xyz, intensity])

Also, we need to implement the convinience functions to create the correct messages for ROS:

def _create_disparity_msg(self, disparity, header, focal_length, baseline):

msg_disp_l = DisparityImage()

msg_disp_l.header = header

msg_disp_l.image = self._bridge.cv2_to_imgmsg(

disparity, header=header)

msg_disp_l.f = focal_length

msg_disp_l.t = baseline

msg_disp_l.min_disparity = focal_length * baseline/self._max_depth

msg_disp_l.max_disparity = focal_length * baseline/self._min_depth

msg_disp_l.delta_d = self._delta_d

def _create_pcl_msg(self, points, header, fields='xyz'):

ros_dtype = sensor_msgs.msg.PointField.FLOAT32

dtype = np.float32

itemsize = np.dtype(dtype).itemsize

data = points.astype(dtype).tobytes()

fields = [sensor_msgs.msg.PointField(

name=n, offset=i*itemsize, datatype=ros_dtype, count=1)

for i, n in enumerate(fields)]

return sensor_msgs.msg.PointCloud2(

header=header,

height=points.shape[0],

width=points.shape[1],

is_dense=False,

is_bigendian=False,

fields=fields,

point_step=(itemsize * len(fields)),

row_step=(itemsize * len(fields) * points.shape[1]),

data=data

)

Finally we can add the main function to launch and spin the node.

def main(args=None):

""" Main function to create, spin and destroy the node."""

rclpy.init(args=args)

node = DepthStereoNetwork()

rclpy.spin(node)

node.destroy_node()

rclpy.shutdown()

if __name__ == '__main__':

main()

Now we are done with the python node. However, we will also need a publisher for the depthai drivers that will publish the left and right image, as well as the camera parameters. There is no simple node available in the depthai-ros pakage but for the start we can use the stereo_node example. Our launch file (launch.sh) can look like the following:

ros2 run depthai_examples stereo_node &

ros2 run stereo_image_proc point_cloud_node --ros-args --remap /disparity:=/left/disparity &

python3 stereo_network_node.py

killall stereo_node

Launching & Testing⌗

We can launch the pipeline by running (in the development container):

./launch.sh

We should see some prints on the command line. In my case the first two frames always take around 5 seconds, from then on the node runs at about 2 Hz.

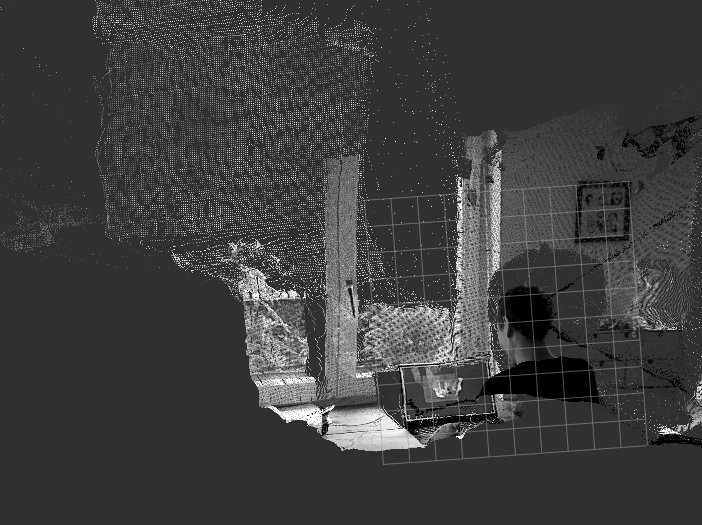

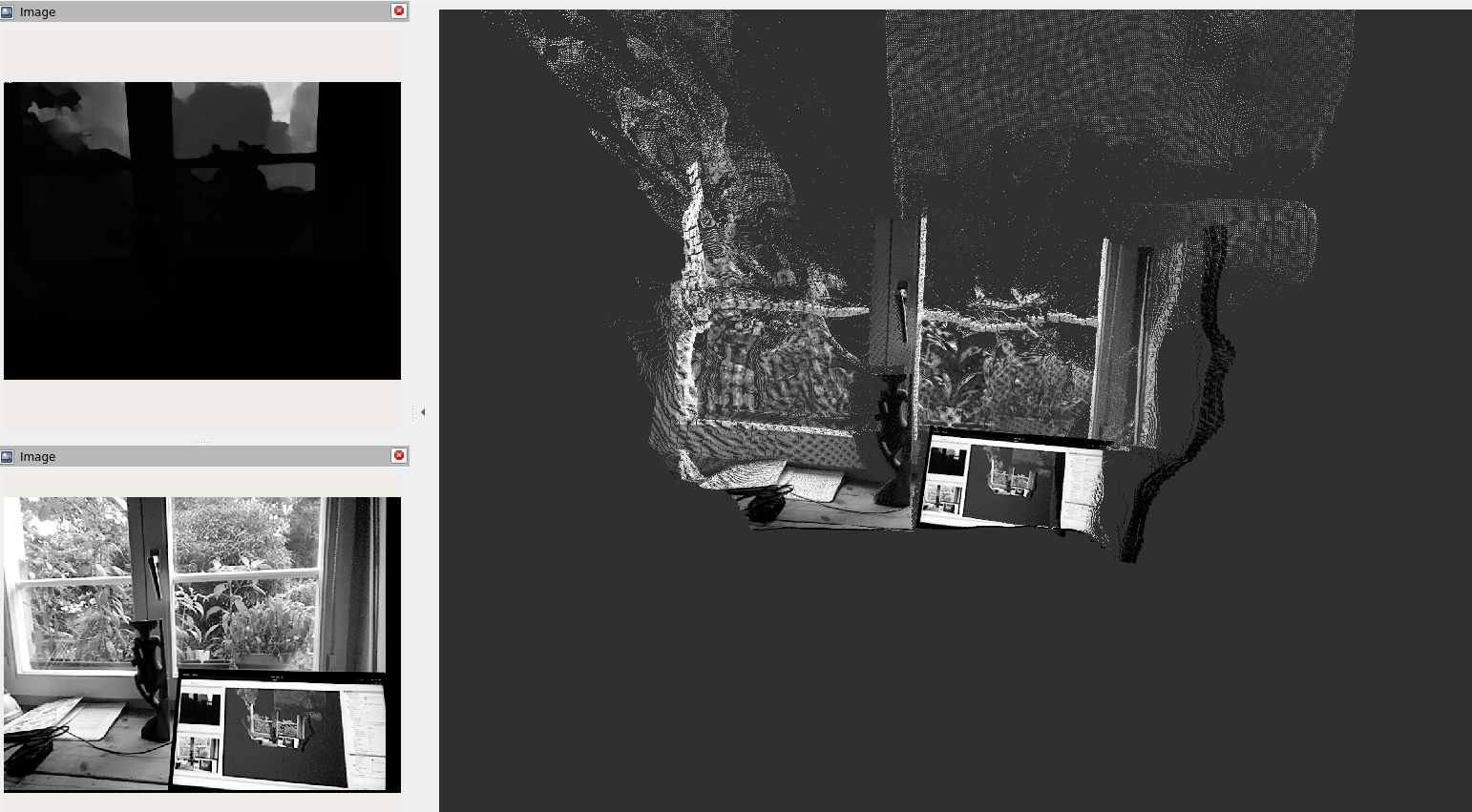

On our host system we can now visually inspect the result in RVIZ. Open the tool and subscribe to the topic /points2. Set the fixed frame to “oak_left_camera_optical_frame” and change the intensity channel to “i” and you should see the intensity impainted point cloud similar to one below:

To this end the network runs relatively slow. A quick fix to increase the inference speed to almost 4 hz is reducing the resolution the network is running on:

#!/bin/bash

ros2 run depthai_examples stereo_node --ros-args -p camera_model:=OAK-D-LITE &

python3 stereo_network_node.py --ros-args -p height:=128 -p width:=160

killall stereo_node

Congratulations! You deployed a stereo network to a NVIDIA jetson and it is publishing its messages via ROS2.

Summary⌗

In a nutshell we did the following:

- Creating a base container with required frameworks compiled for ARM-architecture

- Creating a development container including the ROS drivers for the oakd-lite camera

- Implementing a ROS node to run the model inference of a deep stereo network

- Setting up a launch script to run a depth from stereo estimation pipeline

We could now proceed by having additional nodes that make use of the data, e.g. an obstacle detection or a 3D mapping algorithm.

Unfortunately, the current rate will be too slow for most applications. We will have to do some runtime optimization. possibly by implementing the node in C++, running all nodes in a single process, quantizing the network and/or using a different deployment runtime.

Topics like this we will cover in future posts. Stay tuned!