Lightweight, automated, controlled replay in ROS2

Abstract⌗

This post describes a lightweight approach to implement a controlled replayer in ROS2. All source code is available here.

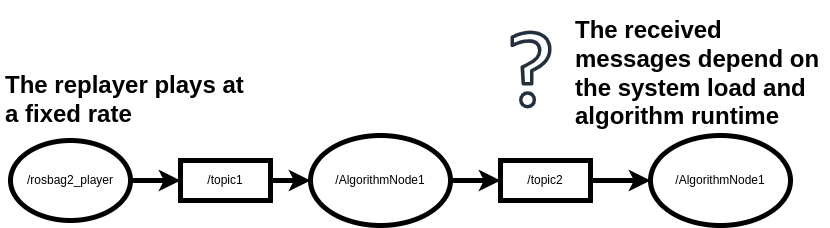

The problem: Unsynchronized replay⌗

Anyone who has ever seriously developed in ROS2 will know the issue: We would like to debug our pipeline or improve tiny parts of the algorithm but as we are repeatedly running the pipeline we are getting different results. If we are in a early phase of development and our pipeline is not running fast enough yet to process all data in real time, we end up loosing messages. If we put a breakpoint in the code, we end up loosing messages. If we have something completely unrelated but semi-heavy processing happening on the same machine in the background, we end up loosing messages. Worst of it all, the symptoms can vary between each run from complete system failure to minimal affect on the results so that we often we don’t realize directly that the loss of messages could be the issue.

We could replay all the data really slowly of course. However, then we end up waiting for ever until the replay has reached the point we are interested in. Similarly, if we would like to run the pipeline on a CI-server we often don’t know how much computational resources are available and how fast we can run our system.

In summary: The replayer replays its messages at a fixed rate which might be either to fast or to slow for the algorithm pipeline. Thus we either loose time or have undetermenistic results.

The approach: A controlled replay⌗

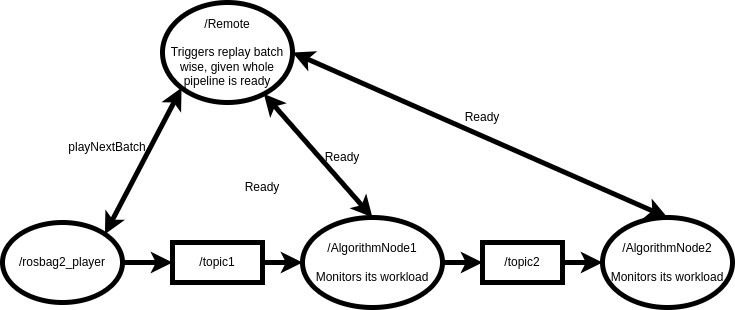

The basic idea to solve this issue is pretty simple: We need a controlled replay that takes into account the current state of the pipeline. However, due to ROS2s asynchronous and loosely-coupled design this it is not so trivial to achieve. Especially because we don’t want to clutter the whole system with mechanisms that are “only” needed for development.

Unfortunately, ROS2 has not an in build solution for this (in my opinion very obvious) problem. Of course it is open source and we could go about and implement our own ros bag replayer. However, as mentioned above we would like to develop an algorithm within the framework. We don’t want to implement the framework first. Luckily, by now the ROS2 tools have evolved quite a bit and with relatively little effort we can apply a sort of workaround that can work well enough in practice.

The concept is to have one additional node that acts like a remote control for the replayer. It triggers the replay of new messages whenever all nodes inform the remote that they are ready.

The implementation⌗

The implementation can be done relatively straight forward using a service-client architecture, which is described in the following. All code including a small example can be found here. It can also be included easily as a ros package.

Remote⌗

The remote is an additional node that acts like a proxy to the rosbag2_replayer. It uses the “Burst” service to trigger the next N messages every T seconds and waits for confirmation by each node. A custom service is implemented do transmit the “Ready” signal along side the identity of the caller. Unfortunately, the replayer does not shut down automatically when we “skip” through the bag using the burst call. Hence, an additional timer regularly checks if the simulation time is still increasing to determine whether the replay has ended. This requires the replayer to be publishing a clock (–clock) and the remote to be run with the use-sim-time parameter set. The node can then shutdown itself / its whole composition when the replay has ended.

We can include it as a composable node or start it via:

ros2 run controllable_replay remote --ros-args -p use_sim_time:=True -p batch_size:=10 -p period:=0.01 -p automatic_shutdown:=5

This will play 10 messages every 10 milliseconds and shut down the node after 5 seconds of inactivity by the replayer.

Algorithm Node⌗

On the algorithm side we need some logic to inform the remote control that we are ready. In simple nodes we can add this at the end of each callback. In more complex nodes there might be not a one to one mapping between messages and callbacks. In this case need some additional logic to monitor the workload of a node e.g. by observing the input queue size.

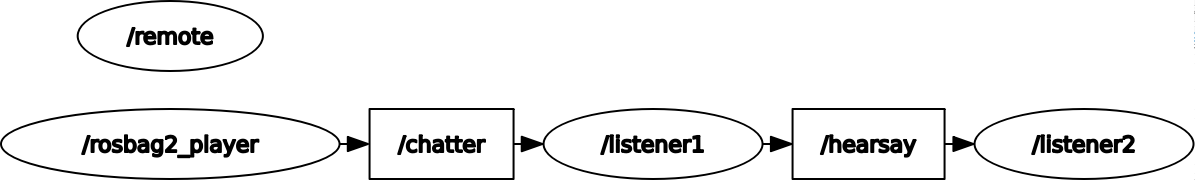

For a simple example we will create a string listener node that counts the number of messages received and simulates a heavy task with a certain time length. After task completion it will publish its own message and inform the remote that it is ready.

#include "Listener.h"

using namespace std::chrono_literals;

namespace controlled_replay_example {

Listener::Listener(const rclcpp::NodeOptions& options) :

rclcpp::Node("Listener", options),

_cliReady{create_client<controlled_replay_interfaces::srv::Ready>("/ready")},

_pub{create_publisher<std_msgs::msg::String>("/hearsay", 10)},

_sub{create_subscription<std_msgs::msg::String>(

"/chatter",

10,

[&](std_msgs::msg::String::ConstSharedPtr msg) {

_ctr++;

std::this_thread::sleep_for(get_parameter("task_time").as_double() * 1000ms); // heavy task

auto rq = std::make_shared<controlled_replay_interfaces::srv::Ready::Request>();

rq->isready = true;

_cliReady->async_send_request(rq); // inform replayer

})},

_timer{create_wall_timer(1s, [&]() { RCLCPP_INFO(get_logger(), "Received messages: %ld", _ctr); })} {

declare_parameter("task_time", 1.0);

}

} // namespace controlled_replay_example

#include "rclcpp_components/register_node_macro.hpp"

RCLCPP_COMPONENTS_REGISTER_NODE(controlled_replay_example::Listener)

Testing⌗

We test the above example with a bag containing 464 string messages at a rate of 100hz. We will simulate an algorithm pipeline that contains two chained nodes with varying time length. The first node will run at 100hz but the second one only at 2hz. Thus theoretically the first node should be able to process all messages but the second one will miss some.

Run I⌗

In the first run we will simply run the pipeline without remote.

#!/bin/bash

ros2 run controlled_replay_example listener --ros-args -p task_time:=0.01 -r __node:=listener1 &

ros2 run controlled_replay_example listener --ros-args -p task_time:=0.5 -r __node:=listener2 -r chatter:=hearsay -r hearsay:=hearsay1&

ros2 bag play bag

Results⌗

[INFO] [1695973373.033875499] [listener2]: Received messages: 8

[INFO] [1695973373.732552274] [listener1]: Received messages: 445

We can see even the first node lost some messages. However, the first one only processed 8!.

Run II⌗

In the second run we will run the pipeline with the remote.

#!/bin/bash

ros2 run controlled_replay_example listener --ros-args -p task_time:=0.01 -r __node:=listener1 &

ros2 run controlled_replay_example listener --ros-args -p task_time:=0.5 -r __node:=listener2 -r chatter:=hearsay -r hearsay:=hearsay1&

ros2 run controlled_replay remote --ros-args -p batch_size:=1 -p use_sim_time:=True &

ros2 bag play --clock --start-paused bag

Results⌗

[INFO] [1695973373.033875499] [listener2]: Received messages: 464

[INFO] [1695973373.732552274] [listener1]: Received messages: 464

We can see both nodes have received all messages.

Conclusion⌗

We have seen a simple method to get controllable replay in ROS2. By adding an additional remote node we can encapsulate the majority of this task away from the actual pipeline. The remote control slows down the replay speed to whatever the node can process and thus avoids loosing messages.

The remote as seen here is available here and can be included via ROS2 package management.

Open Issues & Future Work⌗

- Soon the replayer should be available as a composable node. I am hoping this will further increase the replay speed. It should work smoothly with the above implementation.

- When using the services the ros bag replayer spams the terminal with messages for each service and I didn’t find a way to supress the logging a part from redirecting it to /dev/null. However, this surpresses all output by the replayer

- It would be nice to find even easier ways to monitor the workload of the nodes, maybe even externally. If we could somehow access the pending callbacks for example this would already help, but I didnt find a way to do this.